Facebook’s other problem: How the company blocks the spread of legitimate stories

by: Daphne Marvel

posted on Monday, July 16, 2018

How, precisely, should we describe Facebook? A social media network for staying connected with your friends? A news publisher? A propagator of false news? A threat to democracy? The social giant has been called many things since its inception nearly 15 years ago. In a way, the platform defies classification, and, understandably, it is challenging to neatly categorize something so new to us as a society. What is undeniable is that Facebook is one of the architects of the digital landscape as we know it today.

As an online gatekeeper, the platform has shown that it wields powerful influence over what information we receive, or don’t receive, about a range of topics, with major implications for public opinion and policymaking. After being widely criticized for amplifying fake news and being required by the European Union to tighten its data and privacy rules, the social media giant has made sweeping changes to its policies and practices. But in its effort to keep false content from getting through, does Facebook at times swing too far in the other direction?

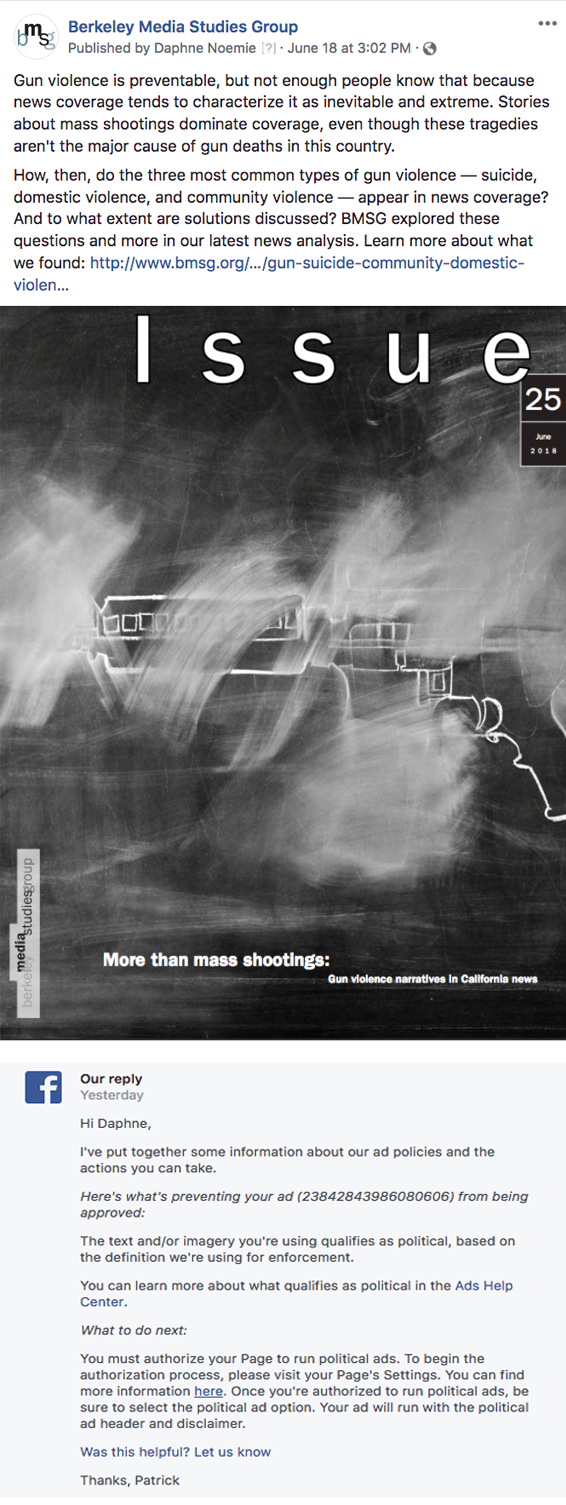

Recently, BMSG published a Facebook post about our latest study: “More Than Mass Shootings: Gun Violence Narratives in California News.” Our findings shed light on several underreported forms of gun violence, filling an important research gap. To reach a wider audience, we tried to promote the post using Facebook’s advertising function, which allows organizations to “boost” posts by paying Facebook to disseminate the content to a broader swath of people on the platform. We expected this to be a straightforward process, involving payment details and the click of a button. However, following Facebook’s review of the post, we received a message that our planned promotion had been rejected because, according to Facebook, our page was “not authorized for ads with political content.”

We were surprised at this outcome, because the post was about a BMSG news analysis and not what we would classify as “political content.” Since Facebook gave us little information about why it would not boost our post, we filed an official appeal for a second review. We explained that BMSG is a nonpartisan nonprofit organization and that the post in question was intended to promote the results of our study on how gun violence is covered in California news. Yet again, we received the same response from Facebook: We could not run the ad. Even though we do not consider our research political, under Facebook’s policy our ad was categorically political content. To promote the study on Facebook, we would have needed to authorize our page to run political ads, and the post would then have run with a disclaimer labeling it as political content. We chose not to do this because it would have been inaccurate and misleading to our audience.

But this issue is much bigger than one BMSG promoted post; other nonprofit organizations have had similar experiences. For example, Voices of Monterey Bay, a nonprofit news site, tried to boost an interview with labor leader Dolores Huerta. After the promotion had run for about a day, Facebook shut the post down, deeming it political. Voices of Monterey Bay has said that it refuses to categorize its content this way.

Facebook, despite its failings and limitations, can be a useful tool for advocates working to shift the dialogue around a public health or social justice issue. Indeed, social media is one of the means through which those engaged in media advocacy can shape the public conversation and eventually effect change. People who wish to reach a broader audience with a real story about health, the environment, poverty, education, or civil rights should be able to promote this content on Facebook. What does it mean for our digital futures that Facebook stops a nonprofit organization from boosting a non-political post about a study on gun violence, or a child care facility from boosting a post about open spots?

Meanwhile, the fight against fake news is far from over, actual political ads are slipping through the net, and the company is under increasing scrutiny for its role in spreading another form of harmful content: misleading and predatory marketing. For instance, fast food and sugary drink companies can promote their products directly to teenagers on the platform, despite the known health risks. Food marketing is a serious obstacle to healthy eating patterns, and food and beverage companies aggressively target communities of color, especially youth, with marketing for foods and drinks that are low in nutrition and high in sugar, salt, and fat. These companies align their marketing practices with the ways young consumers use social media, and Facebook is one of the channels through which they target kids of color.

How, then, should the social media giant determine what content is allowed through and what content gets restricted? How can it allow researchers and advocates to share and discuss important information — even on controversial topics — while simultaneously protecting users from fake news, aggressive marketing, and other damaging content?

Facebook’s screening criteria should not be black-and-white: The company should have a more nuanced process for exercising its responsibility. Facebook has the resources and ability to weed out false news — and more importantly, it has the resources to do so in a responsible way. Content like the BMSG gun violence post and many others that are being rejected by Facebook should be reviewed more closely, because a less cut-and-dried process would allow the platform to make more accurate and informed decisions about which stories make it through its advertisement vetting process.

The implications of Facebook limiting content are broad. They apply not only to nonprofits and advocates but also to news organizations. After Facebook rejected its promoted post on immigration policy, Reveal from the Center for Investigative Reporting tweeted that there’s a difference between political content and “journalistic content that deals with policy.” People have a right to see posts about issues that affect everyone’s lives and well-being.

Facebook’s rigid enforcement rules miss a key distinction: A topic being part of the political conversation does not make all content about that topic political. For instance, a news story on the science of climate change is not the same as an opinion piece advocating for a specific environmental policy. With the considerable resources at its disposal, the platform should invest in building internal processes that can make finer distinctions among various types of content.

People’s ability to access accurate, uncensored information is a pillar of democracy. And now more than ever, it is crucial for advocates and reporters to have their voices heard. Supporting these values involves calling on Facebook to take its responsibility seriously and to stop obstructing the dissemination of legitimate information on the issues facing our society today.

Has Facebook censored one of your non-political posts? We want to hear from you! Share your stories or screenshots with us at info@bmsg.org, @BMSG, or on Facebook.